Introduction

Scaling refers to expanding an application or system to handle increases in traffic, data, or demand. As usage grows, scaling helps maintain performance, availability and reliability.

In this guide, we’ll explore common scaling approaches like vertical, horizontal, and microservice scaling. You’ll learn scaling best practices around load balancing, databases, caching, and monitoring.

By understanding core scaling concepts, beginners can build more scalable systems as usage increases. Let’s get started with scaling basics!

Why Scale Applications?

Scaling becomes necessary when:

- Traffic surges – Spikes in visitors degrade app performance

- Data grows – Increased data slows down database and app responsiveness

- New features added – New capabilities impact app efficiency and complexity

- New platforms added – Supporting more platforms places higher loads

Without scaling, users will encounter slow speeds, outages, and reliability issues. Scaling ensures quality of service even during peak demand.

Vertical vs Horizontal Scaling

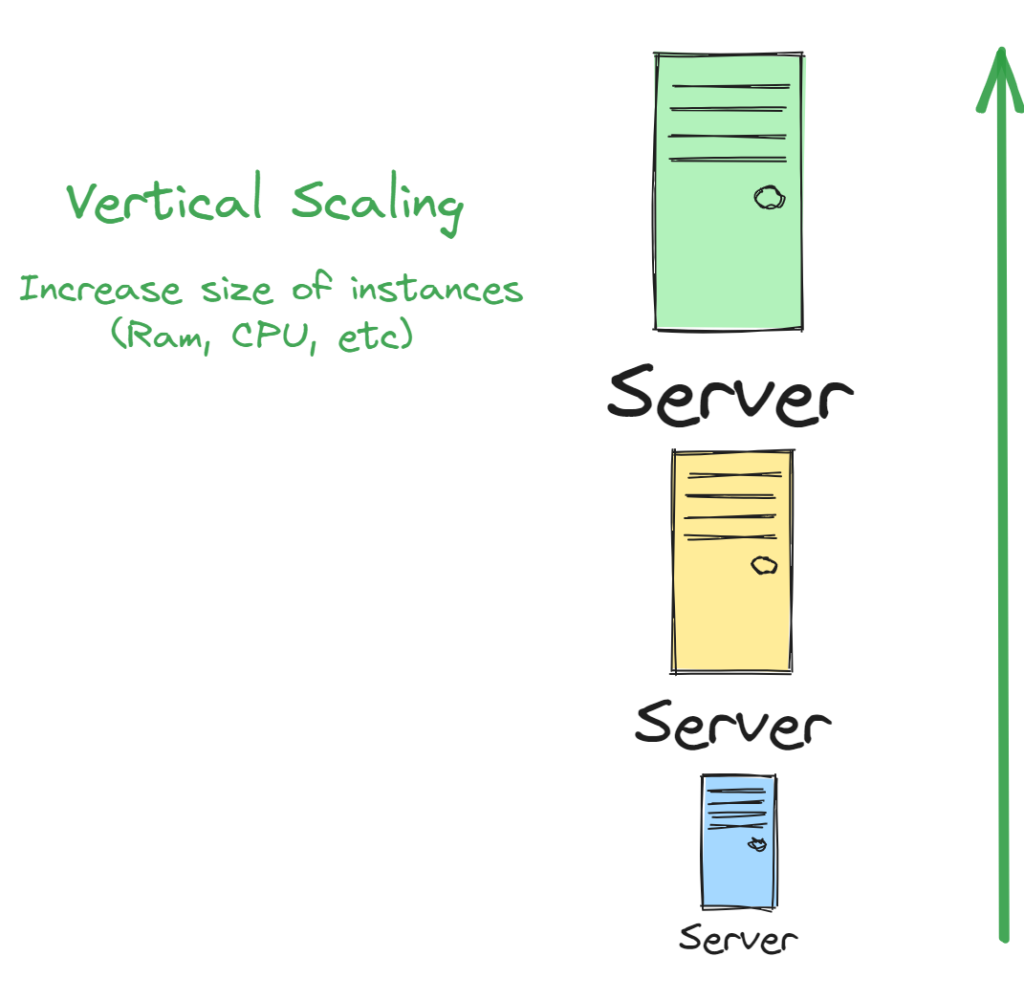

Vertical Scaling: Reaching New Heights

Vertical scaling, often referred to as “scaling up,” involves enhancing the capacity of an existing server or machine. Picture it as adding more horsepower to your vehicle’s engine. Here’s how vertical scaling works:

- Upgrading Hardware: In vertical scaling, you invest in more powerful hardware components, such as increasing CPU speed, adding more RAM, or expanding storage capacity. This process makes your existing server more robust.

- Single Point of Focus: Vertical scaling focuses on a single machine or server. It allows you to maximize the performance of that machine and is suitable for applications with high resource requirements.

- Simplicity: Scaling vertically is relatively straightforward. You upgrade the server’s components, and it’s ready to handle more load.

However, vertical scaling has limitations:

- Cost: High-end hardware components can be expensive.

- Scalability Ceiling: Eventually, you reach a point where further upgrades are not feasible or cost-effective.

- Single Point of Failure: Relying on a single machine can pose risks in terms of redundancy and fault tolerance.

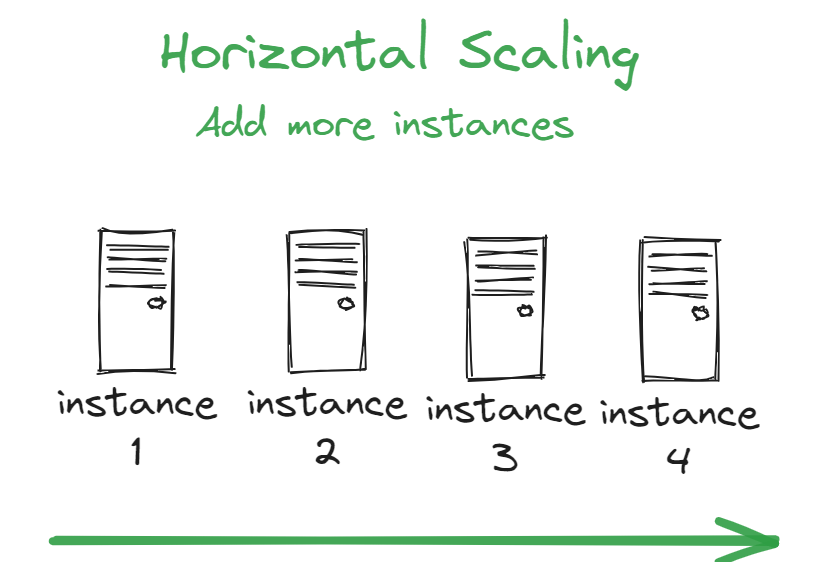

Horizontal Scaling: Spreading the Load

Horizontal scaling, known as “scaling out,” involves adding more machines or servers to distribute the workload. Think of it as adding more lanes to a highway to accommodate more traffic. Here’s how horizontal scaling operates:

- Adding More Servers: Instead of upgrading a single server, you add more servers to your infrastructure. These servers work together to handle incoming requests.

- Load Balancing: A load balancer distributes incoming requests evenly among the servers. This ensures optimal resource utilization and prevents any single server from becoming a bottleneck.

- Flexibility: Horizontal scaling offers greater flexibility to adapt to changing workloads. You can add or remove servers as needed.

Horizontal scaling excels in several aspects:

- Cost-Efficiency: You can start with a small number of servers and expand gradually as demand grows, making it cost-effective.

- High Availability: Multiple servers provide redundancy, reducing the risk of downtime due to server failures.

- Scalability: Horizontal scaling can keep absorbing growth by adding more servers, as long as shared components such as the database and load balancer can keep up.

However, it comes with its own challenges:

- Complexity: Managing multiple servers and load balancing can be more complex than vertical scaling.

- Data Synchronization: Ensuring data consistency across distributed servers can be a challenge.
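As a rough illustration of "adding more servers", the arithmetic behind a scale-out estimate can be sketched as below. The function name `servers_needed` and the traffic figures are hypothetical, and the per-server throughput is assumed to come from your own load tests rather than from any rule of thumb.

```python
import math


def servers_needed(peak_rps: float, per_server_rps: float, spare: int = 1) -> int:
    """Estimate how many servers cover peak traffic, plus spares for redundancy."""
    if per_server_rps <= 0:
        raise ValueError("per_server_rps must be positive")
    # Round up: a fraction of a server still means one more machine.
    return math.ceil(peak_rps / per_server_rps) + spare


# Hypothetical numbers: 4,500 requests/s at peak, 400 requests/s per server.
print(servers_needed(4500, 400))  # 13 (12 to carry the load, 1 spare)
```

The spare server reflects the high-availability point above: the fleet should still carry peak load if one machine fails.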

Vertical vs Horizontal Scaling at a Glance

| Aspect | Vertical Scaling | Horizontal Scaling |
|---|---|---|
| Definition | Enhancing the capacity of a single server/machine. | Adding more machines or servers to distribute the workload. |
| Focus | Single, powerful machine. | Multiple, smaller machines. |
| Hardware Upgrade | Upgrading components (CPU, RAM, storage) of an existing server. | Adding new servers to the infrastructure. |
| Load Distribution | All requests are handled by one machine. | Requests are distributed among multiple machines using load balancing. |
| Resource Utilization | Optimizes the performance of a single server. | Distributes the workload evenly among servers, preventing bottlenecks. |
| Cost | Can be costly, especially for high-end hardware upgrades. | Cost-effective, as you can start with a few servers. |
| Scalability | Limited scalability. Further upgrades may not be feasible. | Highly scalable. Can add more servers to accommodate growth. |
| Redundancy | Single point of failure. | Provides redundancy through multiple servers, reducing risk of downtime. |
| Complexity | Relatively simple to implement. | Requires managing multiple servers and load balancing. |
| Data Synchronization | Easier data synchronization since it’s on a single server. | Complex data synchronization may be required across servers. |
| Flexibility | Less flexible for handling dynamic workloads. | Offers flexibility to adjust to changing workloads. |
| Scalability Ceiling | Eventually reaches a limit. | Potentially infinite scalability with the addition of servers. |

Stateless vs Stateful Applications

Stateless applications don’t store data or session state internally. They are easier to scale.

Stateful applications keep client data, such as session state, in the memory of a specific app server instance. Scaling them out requires sticky sessions or replicating session state across instances.

When possible, favor stateless designs for simpler scaling. But many apps require stateful aspects.
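One common way to make an app server stateless is to move session data into a shared external store. The sketch below is only an illustration: `SessionStore` is a hypothetical in-memory stand-in for something like Redis or a database, and `AppServer` is an invented class, not a real framework API.

```python
class SessionStore:
    """Hypothetical stand-in for an external store (e.g. Redis); here just a dict."""

    def __init__(self):
        self._data = {}

    def get(self, session_id):
        return self._data.get(session_id, {})

    def put(self, session_id, session):
        self._data[session_id] = session


class AppServer:
    """Keeps no session state of its own, so any instance can serve any request."""

    def __init__(self, name, store):
        self.name = name
        self.store = store  # shared by all instances

    def add_to_cart(self, session_id, item):
        session = self.store.get(session_id)
        cart = session.get("cart", []) + [item]
        self.store.put(session_id, {**session, "cart": cart})
        return cart


store = SessionStore()
server_a = AppServer("a", store)
server_b = AppServer("b", store)

server_a.add_to_cart("s1", "book")
cart = server_b.add_to_cart("s1", "pen")  # a different instance sees the same cart
print(cart)  # ['book', 'pen']
```

Because neither instance holds the cart itself, a load balancer is free to send each request to whichever server is available.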

Load Balancing Strategies

Load balancers distribute incoming client requests across backend application servers:

- Round robin – Requests cycle through servers sequentially

- Least connections – Directs traffic to server with fewest open connections

- Source/IP hash – Requests from one source consistently sent to same backend

- Performance-based – Sends to fastest responding server

Strategies can be combined, for example round robin with additional logic based on server overhead, latency, or geography.
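To make the first three strategies concrete, here is a minimal sketch of each selection rule. The class names and server names are made up for illustration, and a real load balancer would also handle health checks, weights, and concurrency, all of which are left out here.

```python
import hashlib
import itertools


class RoundRobin:
    """Cycle through the servers in order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Pick the server with the fewest open connections."""

    def __init__(self, servers):
        self.open = {server: 0 for server in servers}

    def pick(self, client_ip=None):
        server = min(self.open, key=self.open.get)
        self.open[server] += 1  # caller must call release() when done
        return server

    def release(self, server):
        self.open[server] -= 1


class IpHash:
    """Map each client IP to the same server every time."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.servers)
        return self.servers[index]


servers = ["app1", "app2", "app3"]  # hypothetical backend names
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']

sticky = IpHash(servers)
print(sticky.pick("203.0.113.7") == sticky.pick("203.0.113.7"))  # True
```

Note that the simple modulo in `IpHash` reassigns most clients whenever the server list changes. Consistent hashing is the usual way to reduce that churn.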

Caching Strategies

Caching improves speed and scaling by temporarily storing data in fast lookup stores:

- Page caching – Cache rendered web pages, API results, etc

- Object caching – Cache data objects from database queries

- Content delivery networks – Geographically distributed caches

Effective caching takes load off primary application and database servers.
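Object caching is often implemented with the cache-aside pattern: look in the cache first, and only query the database on a miss. The sketch below assumes a simple time-to-live (TTL) expiry policy. `TTLCache` and `load_user` are hypothetical names, and the "database" is just a function that records how often it is called.

```python
import time


class TTLCache:
    """Cache-aside helper: entries expire ttl_seconds after they are loaded."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so expiry can be tested
        self._items = {}            # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._items.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired: fall back to the slow source and remember the result.
        self.misses += 1
        value = loader(key)
        self._items[key] = (now + self.ttl, value)
        return value


db_calls = []


def load_user(user_id):
    """Hypothetical slow lookup standing in for a database query."""
    db_calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=60)
cache.get_or_load(7, load_user)
cache.get_or_load(7, load_user)  # served from the cache
print(len(db_calls), cache.hits, cache.misses)  # 1 1 1
```

The hit and miss counters are the same numbers a monitoring system would track to judge whether the cache is earning its keep.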

Database Scaling Techniques

The database often becomes the bottleneck as an application scales. Common database scaling strategies include:

- Read replicas – Use additional read-only copies for handling queries

- Data sharding – Split data across distributed database servers

- Master-slave replication – Replicate writeable master to read-only slaves

- Migrate to NoSQL – Adopt distributed NoSQL databases like MongoDB

Apply strategies based on data models, sharding schemes, and access patterns.
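Two of the strategies above, sharding and read replicas, come down to routing decisions in the application or a proxy. The following is a minimal sketch under stated assumptions: the shard and replica names are hypothetical, hash-modulo is only one of several possible sharding schemes, and no real database driver is involved.

```python
import hashlib

SHARDS = ["db-shard-0", "db-shard-1", "db-shard-2", "db-shard-3"]  # hypothetical


def shard_for(key: str, shards=SHARDS) -> str:
    """Hash-based sharding: the same key always maps to the same shard."""
    digest = hashlib.sha256(key.encode()).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]


class ReplicaRouter:
    """Send writes to the primary and rotate reads across read-only replicas."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self.replicas = list(replicas)
        self._next = 0

    def for_write(self):
        return self.primary

    def for_read(self):
        if not self.replicas:
            return self.primary  # no replicas configured: read from the primary
        replica = self.replicas[self._next % len(self.replicas)]
        self._next += 1
        return replica


print(shard_for("user:42") == shard_for("user:42"))  # True

router = ReplicaRouter("db-primary", ["db-replica-1", "db-replica-2"])
print(router.for_write())                      # db-primary
print(router.for_read(), router.for_read())    # db-replica-1 db-replica-2
```

Reads served by a replica can be slightly stale because of replication lag, so reads that must see the latest write are usually sent to the primary.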

Microservices Architecture

Microservices break monolithic apps into independent modules – typically single responsibility services. This provides:

- Independent scaling – Scale specific services up/down as needed.

- Technology flexibility – Mix languages and frameworks per service.

- Fault isolation – If one service fails, only that piece goes down.

- Agile delivery – Services can be built, updated and deployed independently.

Microservices require more upfront work but enable more resilient growth.

Scaling Application Code

Application changes help utilize scaled resources effectively:

- Asynchronous processing – Queue work for background handling

- Stream processing – Sequentially process continuous data flows

- Stateless design – Avoid sticky user sessions

- Caching – Return cached data instead of recomputing

- Resource pooling – Reuse and limit expensive resources like DB connections

- Data pagination – Fetch partial result sets from DBs

Efficient code complements infrastructure scaling.
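As one example from the list above, asynchronous processing can be sketched with Python's standard `queue` and `threading` modules. This is an in-process illustration only: production systems usually put a message broker between the web tier and the workers, and `start_workers` is a hypothetical helper name.

```python
import queue
import threading


def start_workers(jobs, handler, results, count=2):
    """Start `count` background threads that drain `jobs` until they see None."""

    def worker():
        while True:
            job = jobs.get()
            if job is None:  # sentinel: stop this worker
                jobs.task_done()
                return
            try:
                results.append(handler(job))
            finally:
                jobs.task_done()

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(count)]
    for thread in threads:
        thread.start()
    return threads


jobs = queue.Queue()
results = []
threads = start_workers(jobs, lambda n: n * n, results, count=2)

for n in range(5):
    jobs.put(n)      # the request path only enqueues and returns immediately

jobs.join()          # wait for the backlog (needed for this demo only)
for _ in threads:
    jobs.put(None)   # one stop signal per worker
for thread in threads:
    thread.join()

print(sorted(results))  # [0, 1, 4, 9, 16]
```

The worker count is the scaling knob here: the queue absorbs bursts, and more workers can be added without touching the code that enqueues jobs.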

Monitoring for Scaling

Robust monitoring helps identify scaling bottlenecks:

- Application metrics – Requests, latencies, error rates

- Server metrics – Memory, CPU, disk, network I/O

- Database metrics – Connections, query throughput, replication lag

- Caching metrics – Hits, misses, evictions

- Tracing – Chase failures and hot spots across distributed apps

Monitor across all layers to guide scaling decisions.
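Application metrics such as latency and error rate are usually summarized before they drive a scaling decision. The sketch below computes a nearest-rank percentile and an error rate from a made-up sample of requests. The function names and the sample data are illustrative, and real monitoring systems may use a different percentile definition.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(requests):
    """requests: list of (latency_ms, status_code) tuples."""
    latencies = [latency for latency, _ in requests]
    errors = sum(1 for _, status in requests if status >= 500)
    return {
        "count": len(requests),
        "error_rate": errors / len(requests),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
    }


# Hypothetical sample: mostly fast requests plus two slow server errors.
sample = [(12, 200), (15, 200), (11, 200), (240, 500), (14, 200),
          (13, 200), (18, 200), (16, 200), (900, 503), (17, 200)]

summary = summarize(sample)
print(summary["p50_ms"], summary["p95_ms"], summary["error_rate"])  # 15 900 0.2
```

The gap between the median and the 95th percentile in this sample shows why averages alone can hide the slow requests that users actually notice.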

Scaling Cloud vs On-Premise Environments

Cloud platforms like AWS easily scale through:

- Vertical scaling by resizing VMs to larger instance types

- Programmatically spinning up servers

- Serverless computing like AWS Lambda

- Managed data stores that auto-scale

On-premise scaling requires manual server capacity planning.
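Programmatic scaling in the cloud is typically driven by a rule that compares a metric with a target. The sketch below uses a simple proportional rule, the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler. The function name, the CPU figures, and the min/max bounds are assumptions for illustration, and real autoscalers add tolerances and cooldown periods that are omitted here.

```python
import math


def desired_replicas(current, metric, target, minimum=1, maximum=10):
    """Proportional rule: scale the replica count by metric / target, then clamp."""
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be positive")
    wanted = math.ceil(current * metric / target)
    return max(minimum, min(maximum, wanted))


# Hypothetical policy: keep average CPU near 60% with 1 to 10 servers.
print(desired_replicas(current=4, metric=90, target=60))   # 6  (scale out)
print(desired_replicas(current=4, metric=20, target=60))   # 2  (scale in)
print(desired_replicas(current=8, metric=120, target=60))  # 10 (capped at maximum)
```

The maximum bound acts as a cost guard, and the minimum keeps enough servers running for redundancy even when traffic is low.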

Common Scaling Pitfalls

Avoid common scaling mistakes like:

- Scaling prematurely, before data has identified a real bottleneck

- Neglecting small users when targeting large customers

- Breaking shared caches or databases by scaling out

- Ignoring data growth, not just traffic growth

- Failing to address increased complexity alongside scale

- Insufficient testing and simulation under load

- Lacking monitoring to pinpoint scaling opportunities

Scale intelligently based on current needs – not imagined future scale.

Conclusion

Scaling requires continual improvement to handle application growth gracefully. Mastering approaches like horizontal scaling, caching, microservices, and monitoring will help you scale smoothly.

Remember to scale iteratively based on real usage data and metrics. With the right foundations, scaling won’t cause growing pains even for runaway success. Plan ahead to scale intelligently.

Frequently Asked Questions

Q: What are warning signs an application needs to be scaled?

A: Degraded performance, traffic spikes overwhelming capacity, database slowness, unavailability during peak loads, and infrastructure costs exceeding revenue.

Q: When does adopting microservices make sense vs scaling monoliths?

A: When monoliths become prohibitively complex and unreliable. Microservices simplify independent scaling.

Q: What are disadvantages of microservices?

A: Increased complexity around decomposition, network calls, duplication, and deployment orchestration. Monitor to avoid overly granular services.

Q: How can scaling be tested before deploying to production?

A: Load testing tools simulate real-world user traffic at scale. Feature flags allow testing with subsets of users.

Q: Does scaling always require significant rewrites?

A: Not necessarily – look for small wins first. Many apps can scale through infrastructure improvements alone, by leveraging cloud elasticity.

Q: How can scaling be automated?

A: Managed cloud providers allow policies for auto-scaling groups. Container orchestrators like Kubernetes can also automate scaling of application pods and, with a cluster autoscaler, of cluster nodes.