Introduction:

Apache Kafka has emerged as a powerful and widely adopted distributed streaming platform for handling real-time data feeds. It provides a scalable, fault-tolerant, and highly available system to handle large volumes of data across various applications and services. In this article, we will explore Apache Kafka and demonstrate how to integrate it with Node.js to build robust and scalable data processing pipelines.

Understanding Apache Kafka:

Apache Kafka is a distributed streaming platform originally developed at LinkedIn and later open-sourced as an Apache Software Foundation project. It is designed to handle high-throughput, fault-tolerant, real-time data streams. Kafka follows a publish-subscribe model: producers publish data to topics, and consumers subscribe to those topics to read it. It achieves durability and fault tolerance by persisting data to disk and replicating it across multiple servers (brokers) in a cluster.

Key Concepts in Kafka:

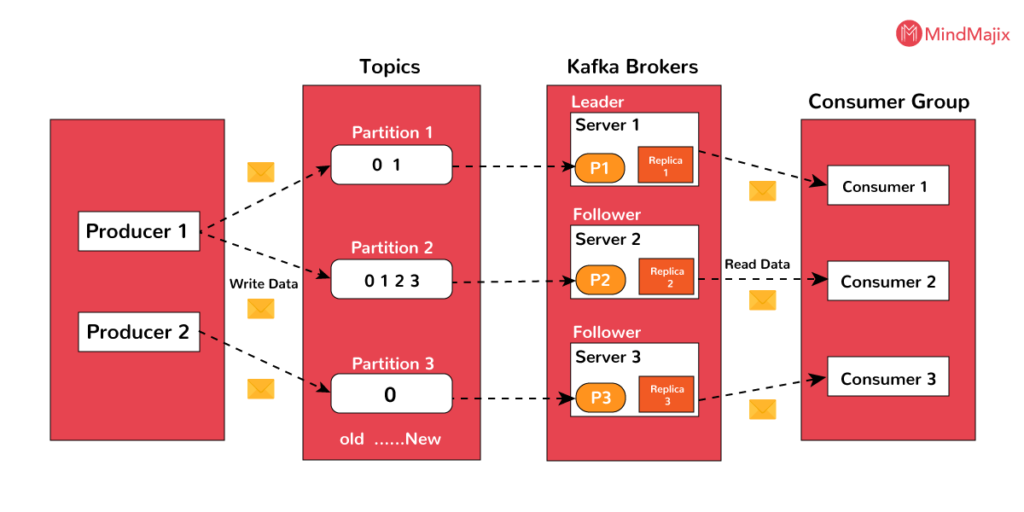

- Topics: Kafka organizes data streams into topics, which are identified by unique names. Producers write messages to specific topics, and consumers read messages from those topics.

- Producers: Producers publish messages to Kafka topics. They can be any application or service that generates data streams.

- Consumers: Consumers read messages from Kafka topics and process them. They can be standalone applications or part of a larger data processing pipeline.

- Partitions: Each topic is divided into partitions, which allows data to be processed in parallel. Messages within a single partition are strictly ordered, and a topic's partitions can be spread across different Kafka brokers for scalability.

- Brokers: Brokers are the Kafka servers that manage the storage and replication of topic partitions. They handle the incoming requests from producers and consumers.
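To make these terms concrete, here is a toy in-memory model in JavaScript. It is not how Kafka stores data and not part of any Kafka client API: ToyTopic, partitionFor, append, and readFrom are hypothetical names, and the string hash is a simplified stand-in for the hash a real partitioner uses. It shows a topic split into partitions, each partition as an append-only log, offsets as positions in that log, and messages with the same key landing in the same partition.

```javascript
// Toy model only: an in-memory "topic" whose partitions are append-only arrays.
// Real Kafka stores partitions as replicated log segments on brokers.
class ToyTopic {
  constructor(name, numPartitions) {
    this.name = name;
    // One append-only log per partition, numbered 0..numPartitions-1.
    this.partitions = Array.from({ length: numPartitions }, () => []);
  }

  // Simplified key-based partitioner: the same key always maps to the same
  // partition. Kafka's default partitioners use a murmur2 hash instead.
  partitionFor(key) {
    let hash = 0;
    for (const ch of String(key)) {
      hash = (hash * 31 + ch.charCodeAt(0)) >>> 0; // keep it a 32-bit unsigned int
    }
    return hash % this.partitions.length;
  }

  // Append a message and report where it landed; the offset is its index in the log.
  append(key, value) {
    const partition = this.partitionFor(key);
    const log = this.partitions[partition];
    log.push({ key, value });
    return { partition, offset: log.length - 1 };
  }

  // Read every message in one partition, in order, starting at the given offset.
  readFrom(partition, offset) {
    return this.partitions[partition]
      .slice(offset)
      .map((msg, i) => ({ offset: offset + i, ...msg }));
  }
}
```

For example, with `const orders = new ToyTopic('orders', 3)`, calling `orders.append('customer-42', 'created')` and then `orders.append('customer-42', 'paid')` places both messages in the same partition at offsets 0 and 1, which is why Kafka can preserve ordering per key.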

How Apache Kafka Works:

Apache Kafka operates on a distributed publish-subscribe messaging model, enabling high-throughput, fault-tolerant, and real-time data streaming. It consists of several key components and follows a specific flow of data. Let’s explore how Apache Kafka works:

- Topics and Partitions: Kafka organizes data into topics, which are identified by unique names. Topics represent data streams or categories to which producers publish messages. Each topic is divided into one or more partitions, numbered from 0, and each partition is an ordered, append-only log. Partitions allow parallel processing of data and enable horizontal scalability.

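- Producers: Producers publish messages to Kafka topics. They can be any application or service that generates data streams. When a producer sends a message, it specifies the target topic, and the producer client decides which of the topic's partitions receives it: an explicitly chosen partition, a partition derived from a hash of the message key (so messages with the same key land in the same partition), or, for messages without a key, a partition chosen to spread the load. Producers can send messages synchronously (waiting for the broker's acknowledgment) or asynchronously, depending on their latency and durability requirements.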

- Brokers: Brokers are the Kafka servers that handle message storage and replication. Each broker hosts one or more partitions of one or more topics. The broker leading a partition receives messages from producers, assigns each one an offset (a sequential identifier unique within that partition), and appends it to the partition’s commit log. A Kafka cluster runs multiple brokers on separate servers to provide fault tolerance and scalability.

- Replication: To ensure fault tolerance and data durability, Kafka replicates partitions. Each partition can have multiple replicas, one of which is the leader while the others are followers. By default, the leader handles all reads and writes for the partition, and the followers copy the leader’s data. If a leader fails, one of the in-sync followers is automatically elected as the new leader, so Kafka keeps functioning even when some brokers or replicas fail.

- Consumers and Consumer Groups: Consumers read messages from Kafka topics. They can be standalone applications or part of a larger data processing pipeline. A consumer can subscribe to one or more topics and let its consumer group assign partitions to it, or it can be assigned specific partitions directly. Each message within a partition has a unique offset, and consumers commit the offsets they have processed so that consumption can resume from the last committed position after a restart or rebalance.

Consumer groups enable parallel processing and load balancing. Kafka assigns each partition of a subscribed topic to exactly one consumer in a group at a time, so each message is normally processed by only one consumer within that group, while separate groups each receive the full stream independently. When consumers join or leave the group, Kafka rebalances the partitions among the active consumers.
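The partition-to-consumer rule can be illustrated with a small JavaScript sketch. This is a toy round-robin assignment written for this article, not the code of any real Kafka assignor; assignPartitions and the consumer names are hypothetical. It shows the two properties described above: every partition goes to exactly one member, and recomputing the assignment after a member leaves redistributes that member's partitions.

```javascript
// Toy round-robin assignment of a topic's partitions to consumer-group members.
// Each partition is owned by exactly one member; a member may own several or none.
function assignPartitions(members, partitionCount) {
  if (members.length === 0) {
    return {}; // nobody to assign to
  }
  // Sort so every member computes the same assignment from the same inputs.
  const sorted = [...members].sort();
  const assignment = Object.fromEntries(sorted.map((m) => [m, []]));
  for (let p = 0; p < partitionCount; p++) {
    assignment[sorted[p % sorted.length]].push(p);
  }
  return assignment;
}
```

With members `['c1', 'c2', 'c3']` and 6 partitions, the result is `{ c1: [0, 3], c2: [1, 4], c3: [2, 5] }`; if c3 leaves, rerunning it with `['c1', 'c2']` "rebalances" to `{ c1: [0, 2, 4], c2: [1, 3, 5] }`. The sketch also shows why a group with more consumers than partitions leaves some consumers idle.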

- Data Retention: Kafka retains messages for a configurable period, regardless of whether they have been consumed. This enables replay: consumers can go back to an earlier offset and reprocess messages. Retention can be bounded by time (the retention.ms topic setting) or by size (retention.bytes). Kafka stores messages efficiently on disk as sequential logs and supports compression, which lets it handle large volumes of data.

- Fault Tolerance and Scalability: Kafka’s distributed architecture provides fault tolerance and scalability. By replicating data across multiple brokers, Kafka ensures that data remains available even if some brokers fail. Additionally, Kafka allows horizontal scaling by adding more brokers to handle increased data throughput. The use of partitions and consumer groups enables parallel processing and efficient utilization of resources.
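Retention can be sketched as a pure function over one partition's log. This is a simplified JavaScript model: real Kafka deletes whole log segments rather than individual messages, and applyRetention with its nowMs, retentionMs, and retentionBytes parameters is a hypothetical helper whose last two names merely echo the real retention.ms and retention.bytes topic settings. Infinity stands in for "no limit". Note that nothing in it depends on whether a message was consumed, and surviving messages keep their original offsets.

```javascript
// Toy model of time- and size-based retention for a single partition's log.
// Each message: { offset, timestampMs, sizeBytes }. Returns the surviving messages.
function applyRetention(log, { nowMs, retentionMs, retentionBytes }) {
  // Time-based: keep only messages younger than the retention window.
  const kept = log.filter((msg) => nowMs - msg.timestampMs <= retentionMs);

  // Size-based: drop the oldest messages until the total fits the byte budget.
  let totalBytes = kept.reduce((sum, msg) => sum + msg.sizeBytes, 0);
  let start = 0;
  while (start < kept.length && totalBytes > retentionBytes) {
    totalBytes -= kept[start].sizeBytes;
    start++;
  }
  // Offsets are not renumbered: consumers can still seek to any surviving offset.
  return kept.slice(start);
}
```

For a log of three 100-byte messages written at 0 ms, 5000 ms, and 9000 ms, evaluating at 10000 ms with a 6000 ms window keeps offsets 1 and 2; with no time limit and a 150-byte budget, only offset 2 survives.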

Overall, Apache Kafka’s design and architecture make it well suited to building data-intensive, real-time applications and systems. It provides a reliable, scalable, and fault-tolerant platform for large-scale data streaming and integration.
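The replication and leader-failover behavior described under Replication can be sketched as a small state transition in JavaScript. This is a deliberately simplified model: handleBrokerFailure and the { replicas, isr, leader } shape are hypothetical, and the rule "pick the first surviving in-sync replica in assigned-replica order" approximates what Kafka's controller does without covering all of its logic.

```javascript
// Toy model of one partition's replica state and leader failover.
// replicas: assigned broker ids in order; isr: in-sync replica ids; leader: broker id or null.
function handleBrokerFailure(partitionState, failedBroker) {
  // The failed broker can no longer be in sync.
  const isr = partitionState.isr.filter((id) => id !== failedBroker);
  let leader = partitionState.leader;
  if (leader === failedBroker) {
    // Elect the first assigned replica that is still in sync.
    const candidate = partitionState.replicas.find((id) => isr.includes(id));
    // No in-sync replica left: the partition goes offline (leader = null).
    leader = candidate === undefined ? null : candidate;
  }
  // A follower failing only shrinks the ISR; the leader stays the same.
  return { ...partitionState, isr, leader };
}
```

Starting from `{ replicas: [1, 2, 3], isr: [1, 2, 3], leader: 1 }`, a failure of broker 1 makes broker 2 the leader, a later failure of follower 3 leaves broker 2 in charge, and losing broker 2 as well leaves the partition without a leader. In real Kafka that last case keeps the partition unavailable unless unclean leader election is enabled.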

Integrating Apache Kafka with Node.js:

To integrate Apache Kafka with Node.js, we can make use of the kafkajs library. It is a modern, feature-rich Kafka client for Node.js that provides a high-level API for producing and consuming messages.

To get started, we need to install the kafkajs library using npm:

```bash
npm install kafkajs
```

Example: Producing and Consuming Messages with Node.js and Kafka:

Let’s walk through a simple example that demonstrates how to produce and consume messages using Kafka and Node.js.

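```javascript
const { Kafka } = require('kafkajs');

// A single client configuration shared by the producer and the consumer.
const kafka = new Kafka({
  clientId: 'my-app',
  brokers: ['localhost:9092'], // adjust to your broker addresses
});

const producer = kafka.producer();
// Consumers that share a groupId divide the topic's partitions among themselves.
const consumer = kafka.consumer({ groupId: 'my-group' });

const run = async () => {
  await producer.connect();
  await consumer.connect();

  // Publish one message to 'my-topic'.
  await producer.send({
    topic: 'my-topic',
    messages: [{ value: 'Hello, Kafka!' }],
  });

  // With no committed offsets for this group yet, fromBeginning: true
  // starts reading at the earliest available offset.
  await consumer.subscribe({ topic: 'my-topic', fromBeginning: true });

  // eachMessage is called once per message; message.value is a Buffer (or null).
  await consumer.run({
    eachMessage: async ({ topic, partition, message }) => {
      console.log(`Received message: ${message.value} from topic ${topic}`);
    },
  });
};

// Disconnect cleanly on Ctrl+C or a termination signal so the group can
// rebalance promptly instead of waiting for a session timeout.
const shutdown = async () => {
  await Promise.allSettled([consumer.disconnect(), producer.disconnect()]);
  process.exit(0);
};
process.on('SIGINT', shutdown);
process.on('SIGTERM', shutdown);

run().catch(console.error);
```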

In the above example, we create a Kafka client using the Kafka class from kafkajs. We initialize a producer and consumer, connect to the Kafka brokers, and then use them to produce and consume messages.

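The producer sends a message with the value ‘Hello, Kafka!’ to the ‘my-topic’ topic. The consumer subscribes to the same topic and receives messages through the eachMessage callback. Because fromBeginning is true and the group ‘my-group’ has no committed offsets on its first run, the consumer starts at the earliest offset and therefore also receives the message that was produced before it subscribed. The consumer then keeps running and waits for new messages until the process is stopped.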
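Real applications usually send structured data rather than plain strings. The helpers below are a hypothetical sketch, not part of kafkajs: toKafkaMessage builds an object in the { key, value } shape that producer.send accepts in its messages array, encoding the payload as JSON, and fromKafkaMessage decodes a message inside eachMessage, where key and value arrive as Buffers (or null). Giving related messages the same key means that, under kafkajs's default partitioner, they go to the same partition and stay in order.

```javascript
// Hypothetical helpers (not part of kafkajs) for sending and reading JSON payloads.

// Build a message for producer.send({ topic, messages: [...] }).
function toKafkaMessage(key, payload) {
  return { key: String(key), value: JSON.stringify(payload) };
}

// Decode a message received in eachMessage; key and value are Buffers or null.
function fromKafkaMessage(message) {
  return {
    key: message.key == null ? null : message.key.toString(),
    payload: message.value == null ? null : JSON.parse(message.value.toString()),
  };
}
```

In the example above, the send call could use `messages: [toKafkaMessage('user-1', { action: 'login' })]`, and the eachMessage callback could start with `const { key, payload } = fromKafkaMessage(message);`. Checking for null guards against messages that carry no value.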

Conclusion:

Apache Kafka is a highly scalable and fault-tolerant streaming platform that enables real-time data integration and processing. By leveraging the kafkajs library, Node.js developers can easily integrate Kafka into their applications and build robust, scalable data pipelines. This article provided an overview of Apache Kafka’s key concepts and demonstrated how to produce and consume messages using Kafka and Node.js. With Kafka and Node.js, developers can harness the power of real-time data streams to build advanced applications and systems.